Personal Information

Name: Pinank Solanki

IRC nick: psolanki

Email: solankipinank10@gmail.com

Github: https://github.com/ps2611

Time Zone: GMT+0530

Project Details

Overview

A rating facility is a fundamental part of any review system. The idea of this project is to add a rating feature to the existing review system in CritiqueBrainz. The implementation follows the discussions on CB-244 (JIRA) and on IRC.

The project will give users the ability to rate entities on a scale of 10. It will also allow a review to consist of a rating alone, i.e. with no text (CB-247).

Goals and Deliverables

1. Implement a rating system on a scale of 10:

a) Extension of the current review database to store ratings.

b) Implementation of CB-247.

c) Addition of rating scale along with other functionalities.

d) Extension of the current revision system.

2. Add a page to show all reviews for a particular entity.

3. Necessary changes to UI relevant to the implemented rating system:

a) Entity information page

- Display average rating

- Display link to the page for showing all reviews of an entity

b) Entity review page

- Display average rating

- Display rating by the author of review

Implementation Details

I propose rating on a scale of 10 for a specific reason: a 10-point scale divides cleanly into the discrete levels that an effective rating system needs. However, if the community prefers a scale of 5, I will be happy to implement that instead.

The other details of the project are as follows.

1a) Extension of the current review database to store ratings

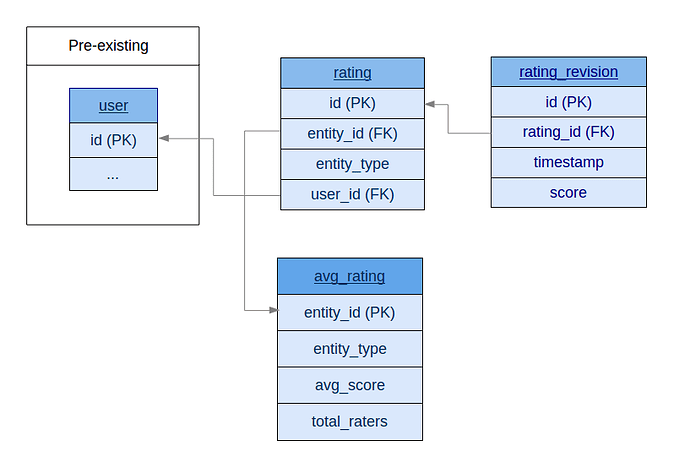

First, three new tables need to be added to the existing schema: rating, rating_revision and avg_rating.

- rating - to store details of user and entity

- rating_revision - to store score and date of every revision

- avg_rating - to store average score of an entity

The schema for this is shown in the image above.
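To make the relationship between the three tables more concrete, here is a minimal sketch using Python's built-in sqlite3 module. It is only an illustration: the column names, the 1 to 10 score range, and the helper functions `create_schema`, `add_revision` and `refresh_average` are assumptions of mine, and the real implementation would target the existing CritiqueBrainz database rather than SQLite.

```python
import sqlite3

# Hypothetical columns; the authoritative schema is the one in the diagram.
SCHEMA = """
CREATE TABLE rating (
    id        TEXT PRIMARY KEY,
    user_id   TEXT NOT NULL,
    entity_id TEXT NOT NULL,
    UNIQUE (user_id, entity_id)
);
CREATE TABLE rating_revision (
    id        INTEGER PRIMARY KEY AUTOINCREMENT,
    rating_id TEXT NOT NULL REFERENCES rating (id),
    score     INTEGER NOT NULL CHECK (score BETWEEN 1 AND 10),
    created   TEXT NOT NULL
);
CREATE TABLE avg_rating (
    entity_id    TEXT PRIMARY KEY,
    score        REAL NOT NULL,
    rating_count INTEGER NOT NULL
);
"""


def create_schema(conn):
    """Create the three illustrative tables."""
    conn.executescript(SCHEMA)


def refresh_average(conn, entity_id):
    """Recompute the average from the latest revision of every rating."""
    avg, count = conn.execute(
        """
        SELECT AVG(rr.score), COUNT(*)
        FROM rating AS r
        JOIN rating_revision AS rr ON rr.rating_id = r.id
        WHERE r.entity_id = ?
          AND rr.id = (SELECT MAX(latest.id)
                       FROM rating_revision AS latest
                       WHERE latest.rating_id = r.id)
        """,
        (entity_id,),
    ).fetchone()
    if count:
        conn.execute(
            "INSERT OR REPLACE INTO avg_rating (entity_id, score, rating_count) "
            "VALUES (?, ?, ?)",
            (entity_id, avg, count),
        )
    else:
        conn.execute("DELETE FROM avg_rating WHERE entity_id = ?", (entity_id,))


def add_revision(conn, rating_id, user_id, entity_id, score, created):
    """Store a new score as a revision and refresh the entity's average."""
    conn.execute(
        "INSERT OR IGNORE INTO rating (id, user_id, entity_id) VALUES (?, ?, ?)",
        (rating_id, user_id, entity_id),
    )
    conn.execute(
        "INSERT INTO rating_revision (rating_id, score, created) VALUES (?, ?, ?)",
        (rating_id, score, created),
    )
    refresh_average(conn, entity_id)
```

In this sketch every change of score adds a row to rating_revision, and only the most recent revision of each rating counts towards the stored average.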

1b) Implementation of CB-247

Rating will be an integral part of the review system, and it needs to work independently of text. The user must therefore be able to review an entity just by providing a rating, and the proposed schema is designed accordingly. A review can then be one of the following three types:

- Text (existing)

- Rating

- Text + Rating

The implementation details are given below in section 1c, under ‘Reviewing an entity’.

1c) Addition of rating scale along with other functionalities

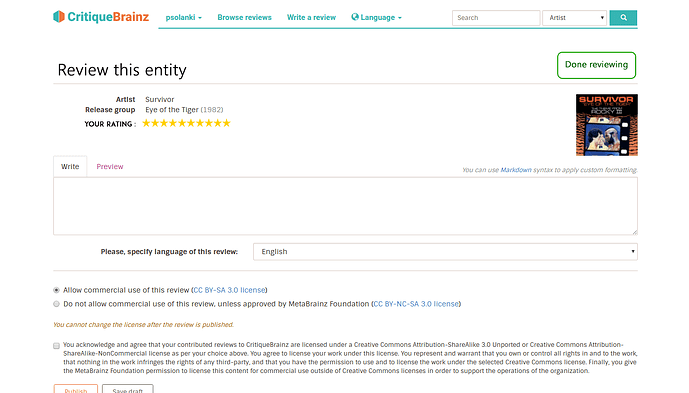

To let users set a rating, a rating scale will be added to the review page and the edit-review page, as shown in the accompanying mock-ups. The proposed design uses stars as marking points, although something simpler, such as a linear scale, would also work.

● Reviewing an entity:

Done reviewing: A new button will be introduced that completes the review process as soon as the user is done reviewing. It works as follows.

- If the user sets a rating and clicks the button, the review is an ‘only rating’ review (CB-247).

- If the user publishes a text review (confirmation of publication will be shown on this page itself) and clicks the button, the review is an ‘only text’ review.

- If the user writes a review along with a rating and clicks the button, the review is a ‘text + rating’ review.
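The three cases above can be sketched as a small classification step that runs when the button is clicked. This is only an illustration under my own assumptions: `ReviewKind` and `classify_review` are hypothetical names, and the 1 to 10 range check assumes the scale starts at 1.

```python
from enum import Enum
from typing import Optional


class ReviewKind(Enum):
    TEXT = "only text"
    RATING = "only rating"
    TEXT_AND_RATING = "text + rating"


def classify_review(text: Optional[str], rating: Optional[int]) -> ReviewKind:
    """Decide which kind of review the user submitted."""
    has_text = bool(text and text.strip())
    has_rating = rating is not None
    if has_rating and not 1 <= rating <= 10:
        raise ValueError("rating must be between 1 and 10")
    if has_text and has_rating:
        return ReviewKind.TEXT_AND_RATING
    if has_rating:
        return ReviewKind.RATING
    if has_text:
        return ReviewKind.TEXT
    # Neither part was provided, so there is nothing to save.
    raise ValueError("a review needs text, a rating, or both")
```

Treating whitespace-only text as missing keeps an empty editor from turning an ‘only rating’ review into a ‘text + rating’ one.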

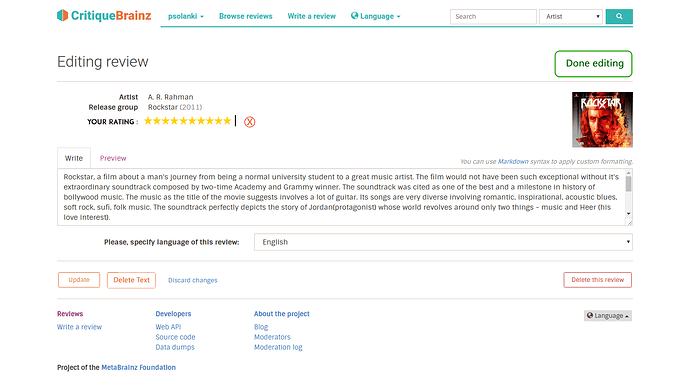

● Editing a review:

Done editing: A new button will be introduced that completes the editing process as soon as the user is done editing. It works similarly to the ‘Done reviewing’ button.

- If the review is ‘only rating’, the text editor will be displayed so that the user can update the review to ‘text + rating’.

- If the review is ‘only text’, the rating scale will be displayed just like on the review page shown above.

Delete this review: This will remove the entire review. However, the user may want to delete only the rating or only the text, so two new functions are needed.

- Delete text: This will delete the text part of the review.

- Delete rating: This will delete the rating part of the review.

(The buttons for these functions are shown in the images below.)

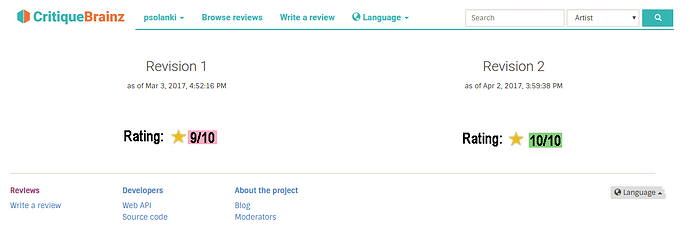

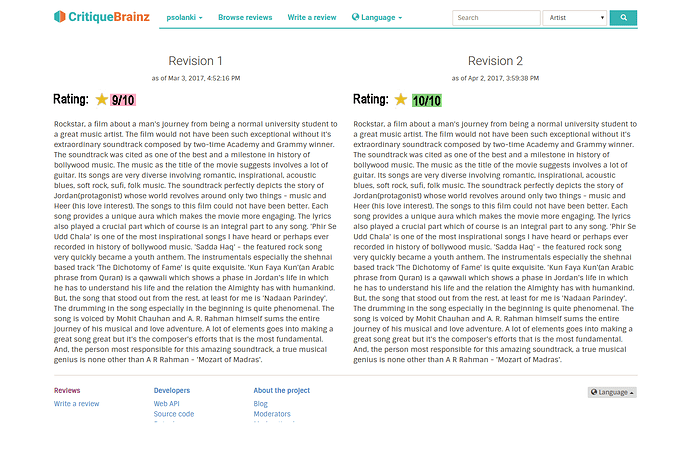

1d) Extension of current revision system

The current revision system tracks changes in ‘only text’ reviews. It will be extended to track revisions of reviews that include a rating as well.

- The proposed schema supports revisions for ratings in the same way that CritiqueBrainz currently supports them for text reviews.

- For ‘text + rating’ reviews, the ‘id’ column in the ‘rating’ and ‘review’ tables will be kept equal so that the text and the rating refer to the same review. This will be enforced when the review is created and when it is edited.

● Revisions for ‘only rating’ reviews

The UI mock-up to view changes is shown on the next page.

● Revisions for ‘text + rating’ reviews

- A change to either the text or the rating will be treated as a new revision of the review.

- There is also the case where the user updates a review by adding a rating to a text review, or text to a rating review. Such an update will also be a new revision.
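The revision rules above can be illustrated with a small in-memory sketch. The `Revision` and `Review` classes and the `changed_parts` helper are hypothetical names used only for this example, not part of the existing CritiqueBrainz code.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass(frozen=True)
class Revision:
    text: Optional[str]
    rating: Optional[int]


@dataclass
class Review:
    revisions: List[Revision] = field(default_factory=list)

    def update(self, text: Optional[str] = None, rating: Optional[int] = None) -> bool:
        """Append a new revision if the text or the rating changed."""
        new = Revision(text, rating)
        if self.revisions and self.revisions[-1] == new:
            return False  # nothing changed, so no new revision
        self.revisions.append(new)
        return True


def changed_parts(old: Revision, new: Revision) -> List[str]:
    """List which parts differ between two revisions, for a 'view changes' page."""
    parts = []
    if old.text != new.text:
        parts.append("text")
    if old.rating != new.rating:
        parts.append("rating")
    return parts
```

Adding a rating to a text review, or text to a rating review, is just another call to `update`, so it produces a new revision in the same way as any other edit.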

The UI mock-up to view changes is shown below.

Note: The mock-ups for viewing changes between revisions only suggest how this could be implemented. A pop-up modal would be a better and more elegant option.

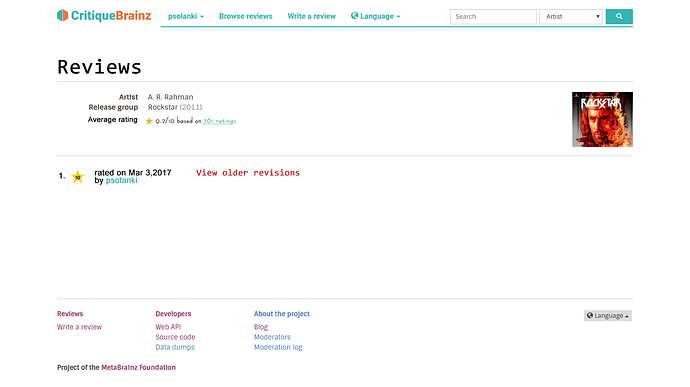

2) Add a page to show all the reviews for a particular entity

This page shows all the reviews for a particular entity. A UI mock-up is shown below.

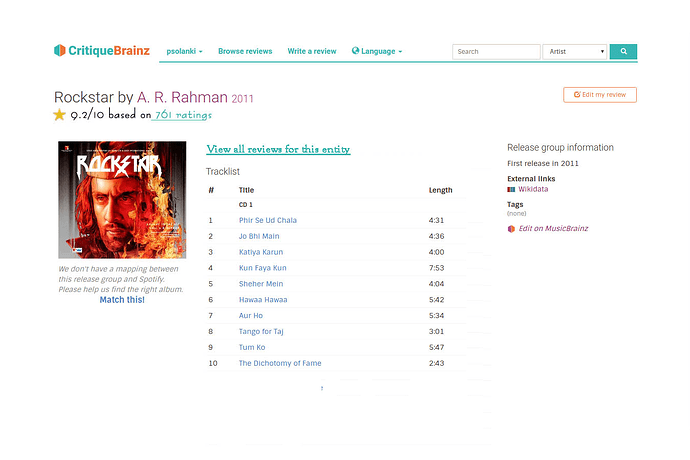

3a) Entity information page after UI changes

- The average rating will be shown just below the title of an entity.

- The existing section that lists reviews will be removed once the rating system is added; it also overshadows the tracklist when there are many reviews. A link to the page for viewing all reviews will be added in its place.

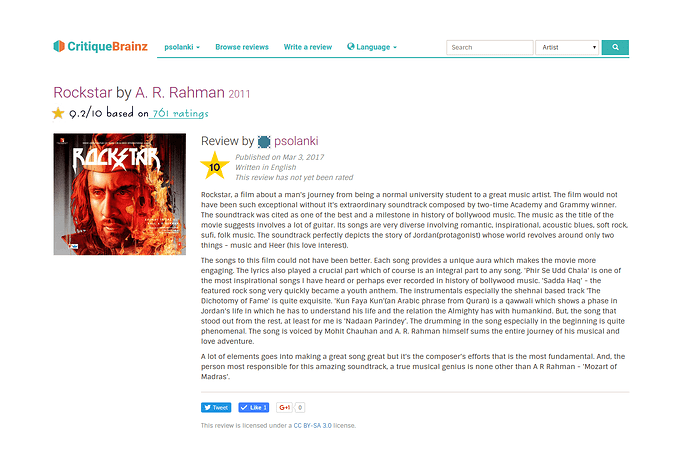

3b) Entity review page after UI changes

Note: All the UI mock-ups in this proposal only represent how I envision the changes fitting the current design of the site. Better suggestions may well come up, and the final implementation at the end of the project could be different.

Timeline

Community Bonding (until May 15)

Further improve my understanding of the codebase by solving various tickets. Stay in constant touch with the mentor to discuss and finalize the specifications and any changes needed in the roadmap.

May 15 - May 31

Create the data-model modules.

June 1 - June 10

No work because of university semester-end exams.

June 10 - June 25

Write test scripts for the modules created.

Start integrating the completed backend work with the proposed frontend for writing and editing reviews.

June 26 - June 30

Testing and resolving problems.

1st term evaluations.

July 1 - July 15

The integration work will extend into this period and be completed here.

Complete the extension of the revision system, with the proposed UI or a modal pop-up for viewing changes.

July 16 - July 23

Add the page for viewing all the reviews.

Complete the UI changes on the entity information and review pages.

July 24 - July 31

Catch up on any tasks not yet completed.

Extensively test the UI changes on all devices.

2nd term evaluations.

Aug 1 - Aug 10

Testing, code clean-up, and review of all completed tasks.

Aug 11 - Aug 20

Update the relevant parts of the documentation.

Buffer time for any unforeseen delays.

Aug 21 - Aug 29

Final code submission and evaluations.

Detailed Information about myself

I am a Computer Science and Engineering undergraduate at the Indian Institute of Technology (IIT), Mandi. I learned about open source and GSoC from a senior of mine and loved it. Being a data enthusiast, I came across MetaBrainz and liked how it brings many different projects together.

1. Tell us about the computer(s) you have available for working on your SoC project!

I have an HP Notebook 15-ac026tx. It has an Intel i5 processor and 4GB RAM. I currently use Ubuntu 16.04 as my OS.

2. When did you first start programming?

I started programming in higher secondary school (11th grade), learning mostly HTML/CSS, and C/C++ for algorithmic problems. I first used Python in my first year of college.

3. What type of music do you listen to?

I mostly listen to Indian music and instrumentals. A. R. Rahman is my favourite composer. I also listen to the likes of Owl City and Hans Zimmer. Some of my favourite compositions are listed below.

Rockstar (6a5540c5-dd93-43b4-a294-6515756bda98)

Rang De Basanti (9071a6ff-8b78-33c7-8c6e-77654c8c3626)

Eye of the Tiger (c0474f4e-6c19-4075-a3c3-05b20399b0bb)

4. What aspects of the project CritiqueBrainz interest you the most?

I have always liked to assess things, whether movies, music or food places, before trying them out. It’s not that I don’t like to try new things, but I feel it’s better to have a heads-up to save time and frustration. And who doesn’t like being their own critic? So what could be better than CB?

5. Have you ever used MusicBrainz to tag your files?

No, but I will definitely try it out.

6. Have you contributed to other Open Source projects? If so, which projects and can we see some of your code? If you have not contributed to open source projects, do you have other code we can look at?

I am fairly new to the open source world, but I have been an active member of my college’s programming society. I have built and maintained websites, blogs and Q&A forums for my university.

I received a gold medal at Inter-IIT Tech Meet 2017 in the Stock Market Analysis event. We built a program in Python that extracts news articles relevant to changes in a company’s stock price, using scraping, sentiment analysis, etc.

7. What sorts of programming projects have you done on your own time?

Some of my previous projects are mentioned below.

- I built the game Hangman for the terminal using C.

- I built a crawler-scraper using Python and PostgreSQL that crawls a site, then extracts and stores its contents for full-text search.

- I also have experience programming on boards like Arduino and Raspberry Pi.

8. How much time do you have available, and how would you plan to use it?

I have no commitments other than GSoC this summer. I will dedicate 40-50 hours per week to my GSoC project. The only exception is June 1-10 (10 days during the coding period), when I will be busy with university exams.

9. Do you plan to have a job or study during the summer in conjunction with SoC?

No. I have no other commitments apart from the exams mentioned above.