Hi everyone,

I wanted to start a discussion around music recommendations on ListenBrainz, particularly around Troi and LB Radio, which currently recommend music based on similar users’ listening patterns and prompt-based blends (artist/tag/stats/recs). Both approaches are well understood and effective, but they share a common gap: neither provides per-track explanations for why a specific song was suggested. Recommendations today are either black-box (Spark CF) or prompt-driven (LB Radio) without a clear, inspectable reason attached to each track.

I’ve been working on a proof-of-concept recommender that adds a new, complementary recommendation strategy — one that is explainable (every track carries a machine-readable reason), uses collaboration as a discovery signal (who actually recorded with your favorites), and integrates directly into the existing ListenBrainz ecosystem as a Troi patch and an LB Radio stream. This isn’t a replacement for anything — it’s a new signal that runs automatically alongside existing recommendations, requiring no user intervention. This post is meant to open that discussion.

Motivation

Troi’s similar-user approach is strong, and LB Radio’s prompt-based blending is flexible — but neither explicitly models a few other aspects of listening behavior that many users intuitively care about. For example, some users enjoy going deeper into artists they already love, while others prefer discovering new artists connected indirectly to their taste through real-world collaborations. Some users value genre consistency, others value era, and others still prefer exploration driven by who actually recorded together.

More importantly, neither system currently tells the user why a particular track was recommended. There’s no “Recommended because this artist collaborated with two of your favorites” or “From an artist you love (Seedhe Maut).” Adding that transparency is a core goal of this project.

High-level idea

The idea is to build an explainable, collaboration-aware recommender that integrates into the existing ListenBrainz infrastructure in two ways:

- As a Troi patch: a new recommendation pipeline that generates playlists automatically, just like existing Troi patches. No user action is required; the system produces “Created for You” playlists with per-track explanations baked in.
- As an LB Radio stream: a new prompt entity (e.g. explainable:(username)) so users can blend explainable recommendations with artist/tag/stats streams, exactly like they do today with other LB Radio prompts.

Importantly, this would remain:

- Automatic: runs as a scheduled Troi patch; users see results without doing anything
- Explainable: every track carries a structured reason (stage, genre/era match, collaboration path)
- Complementary: does not replace Spark CF, Troi, or LB Radio; adds a new signal alongside them
- Open and inspectable: all scoring logic is transparent and debuggable

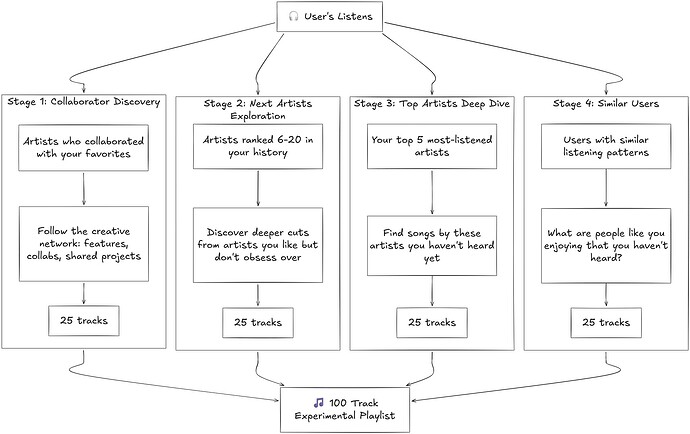

How recommendations are divided into four sections

To make the system easier to reason about (both for users and contributors), recommendations are intentionally segregated into four sections, each representing a distinct discovery strategy. In the current prototype, each section contributes 25 tracks, for a total of 100 recommendations.

This 25–25–25–25 split is a starting point, not an assumption that all users want all discovery strategies equally.

# 1. Discovery via collaborator networks

This section is based on artist relationships rather than user similarity.

- Identifies artists who have collaborated with multiple artists the user already enjoys
- Treats collaboration as a signal of shared musical context or scene
- Prioritizes collaborators who appear repeatedly across the user’s favorite artists

This reflects how many people discover music organically: through liner notes, features, and shared creative circles.

Explainability: Each track carries a reason like “Collaborator of Seedhe Maut & KR$NA (KSHMR)” — you can see exactly which collaboration path led to the recommendation.
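A minimal sketch of how collaborator candidates could be ranked and turned into that reason string. The function names, the `min_links` threshold, and the input shape (a flat list of artist pairs that share a recording) are hypothetical; the POC derives collaborations from MusicBrainz and may rank them differently.

```python
from collections import defaultdict


def rank_collaborators(favorites, collaborations, min_links=2):
    """Rank non-favorite artists by how many distinct favorites they recorded with.

    favorites: set of the user's favorite artist names.
    collaborations: iterable of (artist_a, artist_b) pairs that share a recording
        (hypothetical input shape).
    min_links: hypothetical threshold for "collaborated with multiple favorites".
    """
    links = defaultdict(set)  # candidate artist -> favorites they recorded with
    for a, b in collaborations:
        if a in favorites and b not in favorites:
            links[b].add(a)
        elif b in favorites and a not in favorites:
            links[a].add(b)
    ranked = [(cand, sorted(via)) for cand, via in links.items() if len(via) >= min_links]
    # Candidates linked to more favorites come first; ties are broken by name.
    ranked.sort(key=lambda item: (-len(item[1]), item[0]))
    return ranked


def collab_reason(candidate, via):
    """Build a human-readable reason string from a collaboration path."""
    return f"Collaborator of {' & '.join(via)} ({candidate})"


favorites = {"Seedhe Maut", "KR$NA", "Artist Y"}
pairs = [("Seedhe Maut", "KSHMR"), ("KSHMR", "KR$NA"), ("Artist Y", "Artist Z")]
for candidate, via in rank_collaborators(favorites, pairs):
    print(collab_reason(candidate, via))  # prints: Collaborator of KR$NA & Seedhe Maut (KSHMR)
```

Artist Z is dropped because it links to only one favorite, which is the "appears repeatedly" rule in code form.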

# 2. Broader exploration of familiar artists

This section expands outward to artists the user listens to regularly, but not obsessively.

- Uses artists ranked just below the user’s top favorites
- Treats them as a single pool rather than isolated silos
- Limits how many songs come from any one artist to avoid dominance

The intent here is to surface depth from artists the user already likes but may not actively seek out.

Explainability: Each track carries a reason like “Next-tier artist (Red Hot Chili Peppers)” — the user understands this is an expansion of their existing taste, not a random suggestion.
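A minimal sketch of the shared pool with a per-artist cap. The tier boundaries (`skip_top`, `tier_size`), the cap value, and the `(artist, title, score)` input shape are hypothetical defaults for illustration, not the prototype's settings.

```python
from collections import Counter


def next_tier_tracks(artist_ranking, candidate_tracks, skip_top=5, tier_size=20,
                     per_artist_cap=3, limit=25):
    """Pick up to `limit` tracks from artists ranked just below the user's favorites.

    artist_ranking: artists ordered by the user's listen count, most-played first.
    candidate_tracks: (artist, title, score) tuples the user hasn't heard.
    """
    tier = set(artist_ranking[skip_top:skip_top + tier_size])
    # One shared pool across all next-tier artists, highest score first.
    pool = sorted((t for t in candidate_tracks if t[0] in tier), key=lambda t: t[2], reverse=True)
    per_artist = Counter()
    picked = []
    for artist, title, _score in pool:
        if per_artist[artist] >= per_artist_cap:
            continue  # the cap keeps any one artist from dominating the section
        per_artist[artist] += 1
        picked.append({"artist": artist, "title": title,
                       "reason": f"Next-tier artist ({artist})"})
        if len(picked) == limit:
            break
    return picked
```

Pooling before capping means a strong next-tier artist can still fill several slots, but never more than the cap.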

# 3. Deep dive into top artists

This section focuses on artists the user already listens to the most.

- Looks at the user’s top few artists by listening history
- Recommends songs by those artists that the user has not heard yet
- Prioritizes familiarity while still encouraging exploration within known catalogs

This reflects the idea that liking an artist does not necessarily mean having explored their full discography.

Explainability: Each track carries a reason like “From an artist you love (Seedhe Maut)” — the simplest and most intuitive reason.
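A minimal sketch of the "unheard tracks from top artists" step. The catalog shape (artist to a list of `(recording_id, title)` pairs) is a hypothetical stand-in for the MusicBrainz lookup, and the round-robin order across artists is an assumption made here so the first artist doesn't take every slot.

```python
def deep_dive_tracks(top_artists, catalog, heard, limit=25):
    """Recommend recordings by the user's top artists that aren't in their history.

    top_artists: the user's top few artists by listen count.
    catalog: dict artist -> list of (recording_id, title) (hypothetical shape).
    heard: set of recording IDs already in the user's listen history.
    """
    # One queue of unheard recordings per top artist, in catalog order.
    queues = [
        [(artist, rid, title) for rid, title in catalog.get(artist, []) if rid not in heard]
        for artist in top_artists
    ]
    picked = []
    # Round-robin across artists until the limit is hit or every queue is empty.
    while len(picked) < limit and any(queues):
        for queue in queues:
            if queue and len(picked) < limit:
                artist, rid, title = queue.pop(0)
                picked.append({"recording_id": rid, "title": title,
                               "reason": f"From an artist you love ({artist})"})
    return picked
```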

# 4. Similar-user discovery (future extension)

This section aligns most closely with what Troi already does today.

- Uses listening overlap between users
- Surfaces songs enjoyed by multiple users with similar listening behavior
- Acts as a form of social validation

In this system, similar-user recommendations are treated as one component, rather than the sole driver.

Explainability: Each track would carry a reason like “Popular among users with similar taste” — the collaborative filtering signal becomes just one named piece of the puzzle.
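Since this stage is still a future extension, here is only a rough sketch of the "enjoyed by multiple similar users" rule. It assumes the set of similar users and their listened recordings are already available (in production that would come from LB's similar-user data); the `min_users` threshold is hypothetical.

```python
from collections import Counter


def similar_user_tracks(similar_users_listens, own_heard, min_users=2, limit=25):
    """Rank unheard recordings by how many similar users listened to them.

    similar_users_listens: dict user -> set of recording IDs (hypothetical shape).
    own_heard: recording IDs the target user has already listened to.
    """
    counts = Counter()
    for recordings in similar_users_listens.values():
        counts.update(set(recordings) - own_heard)
    ranked = [(rid, n) for rid, n in counts.most_common() if n >= min_users]
    return [{"recording_id": rid, "supporting_users": n,
             "reason": "Popular among users with similar taste"}
            for rid, n in ranked[:limit]]
```

Keeping `supporting_users` in the output lets the reason be made more specific later (e.g. naming how many similar users liked the track).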

Why keep these sections separate?

Keeping recommendations separated by strategy makes the system easier to explain, critique, and evaluate.

Instead of asking whether a recommendation is “good” or “bad” in isolation, users and contributors can reason about why a song appeared and whether that discovery path makes sense. This structure also makes it easier to compare strategies against each other and understand which ones users actually find useful.

When the system integrates as a Troi patch, the sections are merged into a single playlist but each track retains its structured reason in the JSPF metadata. When it integrates as an LB Radio stream, the explainable tracks are weighted and blended with other streams just like any other LB Radio source.
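The two integration paths can be sketched as two small functions. `merge_sections` interleaves the per-section lists into one playlist while keeping each track's reason; the interleaving order and the rule that a duplicate recording keeps the first section's reason are assumptions for illustration. `blend_streams` only illustrates weight-proportional mixing with source tracking; LB Radio has its own blending logic, and this is not it.

```python
import random
from itertools import zip_longest


def merge_sections(sections):
    """Interleave per-section track lists into one playlist (Troi patch path).

    sections: dict section name -> list of track dicts with "recording_id" and "reason".
    """
    merged, seen = [], set()
    for row in zip_longest(*sections.values()):
        for track in row:
            if track is None or track["recording_id"] in seen:
                continue  # shorter section ran out, or recording already placed
            seen.add(track["recording_id"])
            merged.append(track)
    return merged


def blend_streams(streams, weights, length, seed=None):
    """Weighted blend of named streams (illustrative only, not LB Radio's code).

    Each slot's source is drawn with probability proportional to its weight, and
    the source name is kept so a UI could flag tracks from the explainable stream.
    """
    rng = random.Random(seed)
    queues = {name: list(tracks) for name, tracks in streams.items()}
    playlist = []
    while len(playlist) < length:
        live = [name for name, queue in queues.items() if queue and weights.get(name, 0) > 0]
        if not live:
            break  # every weighted stream is exhausted
        source = rng.choices(live, weights=[weights[name] for name in live])[0]
        playlist.append({"track": queues[source].pop(0), "source": source})
    return playlist
```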

Scoring and configurability

The current prototype already uses an explicit weighting mechanism rather than a fixed or opaque formula.

Tracks are scored using a small set of interpretable parameters, such as:

- genre and tag alignment
- artist familiarity versus exploration
- collaboration signals
- era alignment
- language match

These parameters are intentionally separated and tunable. This makes it feasible to expose limited user-facing configurability, such as adjusting how much era, genre, or exploration should matter, without requiring users to understand the underlying implementation.
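One way such a score stays inspectable is a plain weighted sum that also returns each signal's contribution. The weight values below are hypothetical (the prototype's actual formula isn't shown in this post), and each feature is assumed to be normalized to [0, 1].

```python
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class ScoreWeights:
    """One weight per interpretable signal (hypothetical defaults)."""
    genre: float = 0.35          # genre and tag alignment
    familiarity: float = 0.20    # artist familiarity versus exploration
    collaboration: float = 0.20  # collaboration signals
    era: float = 0.15            # era alignment
    language: float = 0.10       # language match


def score_track(features, weights=ScoreWeights()):
    """Weighted sum of per-signal features, each assumed to lie in [0, 1].

    Returns the total plus each signal's contribution, so a score can always be
    broken down into the parts that produced it.
    """
    contributions = {
        f.name: getattr(weights, f.name) * features.get(f.name, 0.0) for f in fields(weights)
    }
    return sum(contributions.values()), contributions


total, parts = score_track({"genre": 1.0, "era": 1.0})
```

A user-facing "care more about era" control would then just be a different `ScoreWeights` instance, with no change to the scoring code.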

Different users may value different sections

One important assumption this experiment explicitly avoids is that all users value all discovery strategies equally.

Some users may strongly prefer deeper exploration of artists they already love, while others may care more about collaborator-based discovery or social validation. By keeping sections independent, the system allows future experimentation where:

- sections can be upweighted or downweighted
- some sections can be disabled entirely
- different defaults can be tested for different listening profiles

This kind of experimentation is difficult to explore when recommendations are produced by a single monolithic model.
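A minimal sketch of how per-section weights could replace the fixed 25–25–25–25 split: equal weights reproduce it, and a weight of 0 disables a section. The section names (other than top_artists, which appears in the reason object) and the largest-remainder rounding are assumptions for illustration.

```python
def section_quotas(weights, total=100):
    """Turn per-section weights into track counts that always sum to `total`.

    weights: dict section name -> non-negative weight; 0 disables that section.
    Rounding uses the largest-remainder method.
    """
    active = {name: w for name, w in weights.items() if w > 0}
    if not active:
        return {name: 0 for name in weights}
    weight_sum = sum(active.values())
    raw = {name: total * w / weight_sum for name, w in active.items()}
    quotas = {name: int(share) for name, share in raw.items()}
    leftover = total - sum(quotas.values())
    # Give the remaining slots to the sections with the largest fractional parts.
    for name in sorted(raw, key=lambda n: raw[n] - quotas[n], reverse=True)[:leftover]:
        quotas[name] += 1
    return {name: quotas.get(name, 0) for name in weights}


# The prototype's default split, then a profile that drops similar-user recs.
print(section_quotas({"collab": 1, "next_tier": 1, "top_artists": 1, "similar_users": 1}))
# prints: {'collab': 25, 'next_tier': 25, 'top_artists': 25, 'similar_users': 25}
print(section_quotas({"collab": 2, "next_tier": 1, "top_artists": 1, "similar_users": 0}))
# prints: {'collab': 50, 'next_tier': 25, 'top_artists': 25, 'similar_users': 0}
```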

Integration: Troi patch and LB Radio

A key part of this project is that recommendations are not siloed in a standalone tool — they flow into the systems ListenBrainz users already use.

Troi patch

The recommender runs as a Troi patch, meaning it generates playlists on a schedule just like existing recommendation pipelines. Users don’t need to do anything — playlists appear in their “Created for You” section with per-track explainability metadata embedded in the JSPF output. This is the primary, automatic integration path.

LB Radio stream

The recommender also exposes a new LB Radio prompt entity, such as explainable:(username) or explainable_recs:(username). This means:

- Users can request explainable recommendations on demand
- Explainable tracks can be blended with artist/tag/stats/recs streams, weighted exactly like any other source
- Optionally, the UI can show which tracks in a blended playlist came from the “explainable” source

This requires:

- A new prompt in the LB Radio prompt parser
- A new element (e.g. LBRadioExplainableRecordingElement) that calls the same pipeline as the standalone patch and returns recordings with reasons attached
- Tests and documentation for the new entity

The LB Radio integration matters because it places the system inside the existing LB ecosystem: it extends LB Radio rather than duplicating it.
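To make the new prompt entity concrete, here is a standalone sketch of recognizing explainable:(username) and explainable_recs:(username). The regex grammar and the `ExplainablePrompt` type are hypothetical; Troi's real LB Radio parser handles many more entities and options and is not reproduced here.

```python
import re
from dataclasses import dataclass

# Hypothetical grammar covering only the two proposed entity names.
EXPLAINABLE_PROMPT = re.compile(r"^(explainable|explainable_recs):\((?P<user>[^()]+)\)$")


@dataclass
class ExplainablePrompt:
    entity: str
    username: str


def parse_explainable_prompt(prompt):
    """Return an ExplainablePrompt, or None if the prompt isn't this entity."""
    match = EXPLAINABLE_PROMPT.match(prompt.strip())
    if match is None:
        return None  # not ours; leave it to the existing prompt parser
    return ExplainablePrompt(entity=match.group(1), username=match.group("user").strip())
```

In the proposed design, the parsed username would then be handed to the new element (e.g. LBRadioExplainableRecordingElement), which runs the same pipeline as the Troi patch.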

Evaluation: how we know this adds value

To demonstrate that explainable recommendations are worth having — not just “another source” — the project includes a metrics pipeline comparing the new system against existing CF recommendations.

For a sample of users, both (1) current CF recs and (2) explainable recs are generated. We then compute:

- Diversity: Artist/release spread, tag entropy
- Coverage: % of recs that have MusicBrainz metadata (artist, release, tags)
- Explainability: % of recs that have a non-empty reason (stage + at least one of genre/era/collaboration path)

The results will be published in the repo as a short report (or notebook) comparing CF and explainable recs, along with reusable scripts so the comparison can be re-run after future changes to the recommender.
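A minimal sketch of the three metrics over a list of recommendation dicts. The record shape (`artist`, `release`, `tags`, `reason` keys) is an assumption; tag entropy is plain Shannon entropy in bits, and release spread would work exactly like artist spread.

```python
import math
from collections import Counter


def tag_entropy(recs):
    """Shannon entropy (bits) of the tag distribution across recommendations."""
    counts = Counter(tag for rec in recs for tag in rec.get("tags") or [])
    total = sum(counts.values())
    if not total:
        return 0.0
    return -sum((n / total) * math.log2(n / total) for n in counts.values())


def artist_spread(recs):
    """Distinct artists per recommendation (1.0 means every track has a different artist)."""
    return len({rec.get("artist") for rec in recs}) / len(recs) if recs else 0.0


def coverage(recs):
    """Share of recs with MusicBrainz artist, release and at least one tag."""
    ok = sum(1 for r in recs if r.get("artist") and r.get("release") and r.get("tags"))
    return ok / len(recs) if recs else 0.0


def explainability(recs):
    """Share of recs whose reason has a stage plus genre, era or collaboration evidence."""
    def explained(reason):
        return bool(reason) and reason.get("stage") is not None and bool(
            reason.get("genre_matches") or reason.get("era_decade") or reason.get("collab_via"))
    return sum(explained(r.get("reason")) for r in recs) / len(recs) if recs else 0.0
```

Running the same four functions over the CF recs and the explainable recs for each sampled user gives directly comparable numbers.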

Explainability in the API and JSPF

Explanations shouldn’t be buried in logs — they should be first-class data so the frontend can show “Why this track?”

Each Recording (or playlist track) carries a small structured reason object:

    {
      "stage": 1,
      "stage_name": "top_artists",
      "genre_matches": ["hip hop", "rap"],
      "era_decade": 2020,
      "collab_via": [],
      "language_matches": ["Hindi"]
    }

A minimal JSPF extension (under a listenbrainz.org or musicbrainz.org namespace) is defined so that playlists saved or returned by the API include this per-track reason. Because the LB playlist API returns JSPF, playlists from “explainable recs” would carry this extension, with no breaking change for existing clients.
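A minimal sketch of the reason object as a typed record, attached to a JSPF track under an extension key. The `EXPLANATION_EXT` URI is a placeholder (the real namespace would need to be agreed with LB maintainers), and writing `identifier` as a list follows the JSPF spec's examples; both should be checked against what the LB playlist API actually emits.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import List, Optional

# Placeholder namespace URI, hypothetical.
EXPLANATION_EXT = "https://listenbrainz.org/jspf/explanation"


@dataclass
class Reason:
    """Per-track reason with the same fields as the JSON object above."""
    stage: int
    stage_name: str
    genre_matches: List[str] = field(default_factory=list)
    era_decade: Optional[int] = None
    collab_via: List[str] = field(default_factory=list)
    language_matches: List[str] = field(default_factory=list)


def jspf_track(recording_mbid, title, creator, reason):
    """Build one JSPF track dict with the reason attached as an extension."""
    return {
        "identifier": [f"https://musicbrainz.org/recording/{recording_mbid}"],
        "title": title,
        "creator": creator,
        # Core track fields are unchanged; only the extension block is new.
        "extension": {EXPLANATION_EXT: asdict(reason)},
    }


reason = Reason(stage=1, stage_name="top_artists", genre_matches=["hip hop", "rap"],
                era_decade=2020, language_matches=["Hindi"])
print(json.dumps(jspf_track("<recording-mbid>", "<title>", "Seedhe Maut", reason), indent=2))
```

Using a dataclass keeps the field set fixed, so the frontend's “Why this track?” view can rely on every key being present.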

Questions for the community

I’d really value feedback on questions like:

- Does Troi’s current similar-user approach cover most discovery needs, or are there gaps it doesn’t address well?
- Is there value in adding per-track explainability to recommendations, or is it unnecessary complexity?
- Does the Troi patch + LB Radio stream integration approach make sense, or would a different integration be better?
- Does separating recommendations by discovery strategy make the system clearer or more confusing?
- Would limited configurability help users better understand recommendations, or add unnecessary complexity?
- How should success for this system be evaluated: engagement, feedback, listening behavior, diversity metrics, or something else?
- Is the collaboration-based discovery (section 1 above; Stage 3 in the prototype) genuinely useful, or do similar-artist recommendations already cover that need?

Critical feedback is especially welcome.

Project roadmap

| Scope | Description | Priority |
|---|---|---|
| A. Evaluation & metrics | Metrics pipeline comparing CF vs explainable recs (diversity, coverage, explainability) | Mandatory |
| B. Explainability in API/JSPF | Structured reasons in Troi output, JSPF extension, API contract | Mandatory |
| C. “Why this track?” UI | Show explanation per track in playlist detail / Created for You | Stretch |
| D. LB Radio integration | New prompt entity, new element, blend with existing streams | Mandatory |
| E. Data pipeline | Production path for listens + MusicBrainz data (LB API, MB database/API, config) | Core |
| F. Stage 4 (similar users) | Collaborative filtering stage using similar-user data from LB | Optional |
| G. Documentation & community | Design doc, user-facing docs, contributor-facing docs | Mandatory |

The core work (~40% of effort) is productionizing the POC as a Troi patch with the LB data pipeline. The remaining effort goes into evaluation, explainability, LB Radio integration, and documentation — the pieces that turn “we added a patch” into “we added a measurable, explainable feature.”

Try the POC

A working proof-of-concept is available in the recommender folder of the recommender-system branch. It runs Stages 1–3 against a local MusicBrainz database and produces a scored, explainable playlist with JSPF export — no ListenBrainz infrastructure required. Check out the README in that folder for setup instructions and sample output.

Thanks for reading, and I’m looking forward to hearing thoughts.