PrathameshG MetaBrainz GSoC 2023 Proposal

Name: Prathamesh S. Ghatole

Email: prathamesh.s.ghatole@gmail.com

Linkedin: https://www.linkedin.com/in/prathamesh-ghatole

GitHub: https://github.com/Prathamesh-Ghatole

IRC Nick: Pratha-Fish

Mentor: @reosarevok

Timezone: Indian Standard Time (GMT +5:30)

Languages: English, Hindi, Marathi

Abstract:

MusicBrainz is an open-source, community-maintained database of music metadata. It not only provides a comprehensive collection of information about various music artists, their releases, and related data such as recording and release dates, labels, and track listings - but also the locations (areas) related to these tracks!

MusicBrainz tracks area types like countries, cities, districts, etc. to indicate the location of recording studios, artist birthplaces, concert halls, events (concerts), etc. But considering the scope of the database, MusicBrainz refers to external databases like Wikidata & GeoNames to keep its area metadata up-to-date.

However, areas are currently added by manually submitting AREQ tickets on the MetaBrainz Jira issue tracker, where dr_saunders manually addresses them and adds/updates areas on MusicBrainz. Because the process is manual, it is cumbersome, causes delays, and leaves outdated areas uncorrected for long periods.

With this GSoC project, we aim to tackle this issue by building a new Mechanize-based “AreaBot” written in Python (similar to the old Perl Bot) to automatically maintain and update areas in MusicBrainz using Wikidata.

Problem Statement:

Currently, areas are mostly added manually as follows:

- Submit an AREQ ticket on the MetaBrainz Jira issue tracker.

(e.g.: [AREQ-3115] Prospect Heights, Brooklyn - MetaBrainz JIRA)

- Once submitted, dr_saunders manually addresses these tickets to create new areas on the MusicBrainz database.

(e.g.: Prospect Heights - MusicBrainz)

The above method, however, is not ideal for the following reasons:

- A tedious amount of manual editing is required.

- Adding areas in bulk is not supported.

- Areas are not being updated in real-time.

- Delays in adding new areas can cause problems for users who need to link areas when adding new recordings to the MusicBrainz database. If an area is not available to link immediately, important area metadata could be missed.

- Area data is not updated automatically and hence becomes outdated unless reported by an editor and fixed manually.

- Existing area data doesn’t automatically update localized names that are later added to the references (Wikidata, Geonames, etc).

Solution Statement:

Initially, areas were added automatically from Wikidata by the old Perl bot. It was discontinued over vandalism concerns: certain editors made malicious Wikidata edits to ensure that specific areas would be added by the bot. However, Wikidata nowadays has much stronger anti-vandalism tools, potentially enabling us to resume automatic area addition using Wikidata.

As the Perl bot is complex and mostly unused, a new Python-based “AreaBot” has to be built. We believe the existing MusicBrainz-Bot could be a great start for the bot.

Some references:

- mb2wikidatabot - GitHub - metabrainz/mb2wikidatabot: A bot for importing data from MusicBrainz into Wikidata

- MusicBrainz-bot (reo’s fork)- GitHub - reosarevok/musicbrainz-bot

- OLD MusicBrainz-bot (in Perl) - GitHub - 96187/musicbrainz-bot

Given the existing Mechanize-based MusicBrainz-bot and Wikidata’s well maintained official Python libraries, we’re positive this solution is reasonable and could be finished well within the proposed 350-hour duration of this project.

Project Goals:

With this project, we propose some primary high-level goals as follows:

- Periodically fetch new areas from Wikidata/Geonames.

- Add new areas to the MusicBrainz database.

- Update missing, or updated metadata for all existing areas from their linked references.

- Write documentation for the existing MusicBrainz-Bot & the newly proposed “AreaBot”.

- Write tests for the existing MusicBrainz-Bot & the newly proposed “AreaBot”.

Deliverables:

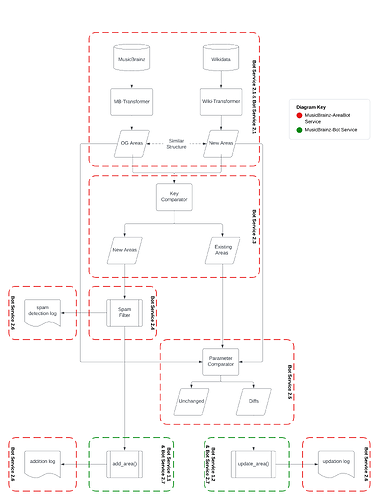

- An updated MusicBrainz-bot with complete support for adding areas.

- Bot Service 1.1: Add new areas to MusicBrainz.

- Bot Service 1.2: Update existing areas on MusicBrainz.

-

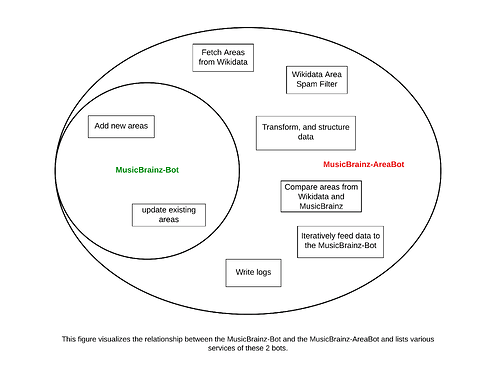

AreaBot (based on MusicBrainz-bot) to add areas to MusicBrainz.

- Bot Service 2.1: Periodically Fetch areas from Wikidata.

- Bot Service 2.2: Transform Wikidata and MusicBrainz area data into a similar structure.

- Bot Service 2.3: Compare Wikidata and MusicBrainz areas. Return a list of “new areas”, and “existing areas”.

- Bot Service 2.4: Wikidata Spam filter.

- Bot Service 2.5: Parameter comparator - Compare parameter differences in existing area data. Create a list of areas with updated parameters.

- Bot Service 2.6: Log Writer - write logs for added/updated areas and spam areas.

- Bot Service 2.7: Data Feeder - Iteratively Feed Data to the MusicBrainz-Bot

- Documentation & Tests for the AreaBot

- Documentation & Tests for MusicBrainz-bot
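As a rough illustration of Bot Services 2.3 and 2.5, the sketch below splits fetched areas into “new” and “existing” and then lists the parameters that differ for an existing area. It assumes both datasets have already been transformed (Bot Service 2.2) into dictionaries keyed by Wikidata ID; the function names, the field names, and the sample records are hypothetical and only meant to show the idea, not the final implementation.

```python
from typing import Dict, List, Tuple

# Assumption: after Bot Service 2.2, each area is a flat dict of parameters,
# and both datasets are keyed by the Wikidata ID (e.g. "Q64").
Area = Dict[str, object]


def split_new_and_existing(
    wikidata_areas: Dict[str, Area],
    musicbrainz_areas: Dict[str, Area],
) -> Tuple[List[str], List[str]]:
    """Return (new_ids, existing_ids) for the fetched Wikidata areas."""
    new_ids = sorted(q for q in wikidata_areas if q not in musicbrainz_areas)
    existing_ids = sorted(q for q in wikidata_areas if q in musicbrainz_areas)
    return new_ids, existing_ids


def changed_parameters(
    wikidata_area: Area, musicbrainz_area: Area
) -> Dict[str, Tuple[object, object]]:
    """Return {field: (musicbrainz_value, wikidata_value)} for differing fields.

    Fields that Wikidata does not provide (None) are ignored, so the bot
    never proposes blanking out a value that MusicBrainz already has.
    """
    diff = {}
    for field, wd_value in wikidata_area.items():
        mb_value = musicbrainz_area.get(field)
        if wd_value is not None and wd_value != mb_value:
            diff[field] = (mb_value, wd_value)
    return diff


# Illustrative sample data (hypothetical field names).
wikidata = {
    "Q64": {"name": "Berlin", "type": "City"},
    "Q90": {"name": "Paris", "type": "City"},
}
musicbrainz = {"Q64": {"name": "Berlin", "type": None}}

new_ids, existing_ids = split_new_and_existing(wikidata, musicbrainz)
updates = {q: changed_parameters(wikidata[q], musicbrainz[q]) for q in existing_ids}
```

Using dictionary lookups keeps the comparison roughly linear in the number of fetched areas, which matters if the full set of Wikidata areas is compared on every run.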

Process Flowchart

Bot Structure

Progress So Far:

MusicBrainz-Bot (My Commits | My PRs)

So far I’ve worked on adding an “add_area” function to the MusicBrainz-Bot (including parameters like references/external-links, ISO codes, etc.), the output of which is currently being tested on https://test.musicbrainz.org/.

e.g.: test_area_name_type_note_ISO_list_ext_link_list - MusicBrainz

Currently, the code for add_area() is up and running with some minor refactoring required.

AreaBot (My Commits)

I have also started working on the AreaBot, which uses the MusicBrainz-bot at its core (as a submodule, not as a fork). I am also using the mb2wikidatabot as a reference to structure this bot.

AreaBot is a standalone bot that includes various scripts to periodically fetch, compare, transform, and update area data using Wikidata. It also includes wrapper scripts to orchestrate the MusicBrainz-Bot to iteratively add the collected areas.

[Please Refer to the “Deliverables” section for more info]
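A wrapper script of this kind could look roughly like the sketch below. It assumes the area-adding function is passed in as a callable, because the exact signature of add_area() in the MusicBrainz-Bot is not fixed here; the name feed_areas, the logger name, and the keyword arguments are hypothetical.

```python
import logging
from typing import Callable, Dict, Iterable, List

logger = logging.getLogger("areabot")  # hypothetical logger name


def feed_areas(
    areas: Iterable[Dict[str, object]],
    add_area: Callable[..., None],
) -> List[str]:
    """Call add_area once per area and return the names that failed.

    A failure on one area is logged and skipped, so a single bad record
    does not stop the rest of the batch.
    """
    failed = []
    for area in areas:
        name = str(area.get("name"))
        try:
            add_area(**area)
            logger.info("added area %s", name)
        except Exception:
            logger.exception("failed to add area %s", name)
            failed.append(name)
    return failed
```

In the real bot the callable would be the MusicBrainz-Bot client method; in tests it can be replaced with a stub that records its calls.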

So far I’ve made 14 commits to this project, writing driver code to call the MusicBrainz-Bot to post test data on test.musicbrainz.org, as well as running various analytics notebooks for comparing areas from Wikidata and the MusicBrainz database.

This bot would be potentially deployed and maintained using Docker.

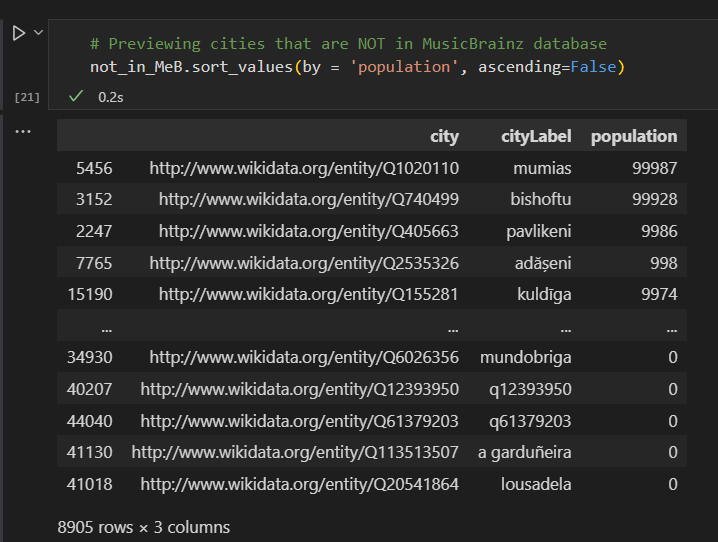

Wikidata & MusicBrainz area coverage comparison & Spam Detection

The following Notebook in the MusicBrainz-AreaBot repository is a simple exploratory notebook that aims to fetch a subset of areas from Wikidata and compare them to existing areas in the MusicBrainz database.

On a small sample dataset with 44107 cities, I’ve so far been able to identify 8905 cities from Wikidata that are NOT present in MusicBrainz. A pretty good start!

As observed above, these missing areas include large cities as well as some malicious locations that, for good reason, were never added.

However, we can filter out these areas based on their population metric. Cities with 0 population are most likely to be fake. But let’s take this a step further and filter out all cities with < 1000 population. This gives us 7268 cities that look pretty legit from the look of it!

To ensure we don’t include spam, we’d implement a spam filter for Wikidata areas with more parameters to filter out spam areas from the good ones. However, I propose we keep it as a stretch goal for now, keeping the time limitations of this project in mind.
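The population-based filter described above can be sketched as follows. The 1000 threshold comes from the text; treating a missing population value the same as a population of 0 is an assumption, and the function name and record layout are hypothetical.

```python
from typing import Dict, Iterable, List, Optional

MIN_POPULATION = 1000  # threshold used in the exploratory notebook


def filter_by_population(
    cities: Iterable[Dict[str, Optional[object]]],
    minimum: int = MIN_POPULATION,
) -> List[Dict[str, Optional[object]]]:
    """Keep only cities whose known population is at least `minimum`.

    Assumption: a city with no population value is dropped, because it
    cannot be distinguished from a fake entry with this metric alone.
    """
    kept = []
    for city in cities:
        population = city.get("population")
        if population is not None and int(population) >= minimum:
            kept.append(city)
    return kept


# Illustrative records only, not real notebook output.
sample = [
    {"name": "A", "population": 0},
    {"name": "B", "population": None},
    {"name": "C", "population": 999},
    {"name": "D", "population": 1000},
    {"name": "E", "population": 50000},
]
kept_names = [city["name"] for city in filter_by_population(sample)]
```

A single metric like this is only a first pass; the stretch-goal spam filter would combine it with further parameters.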

Testing SPARQL queries for Wikidata:

As a learning exercise, I’ve been testing out relevant SPARQL queries for fetching areas from Wikidata in the following Jupyter Notebook: MusicBrainz-AreaBot/TEST_wikidata_queries.ipynb at master · Prathamesh-Ghatole/MusicBrainz-AreaBot · GitHub

(Please find the queries for the above Screenshots in the Notebook linked above.)
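For readers without access to the notebook, here is a minimal sketch of what such a query and its result handling might look like. It is not the query from the notebook: it assumes cities are selected as instances of Q515 (city) or its subclasses, with P1082 (population) as an optional field, and the helper names are hypothetical. Sending the query to the Wikidata Query Service is left out so the example stays runnable offline.

```python
from typing import Dict, List

WIKIDATA_SPARQL_ENDPOINT = "https://query.wikidata.org/sparql"


def build_city_query(limit: int) -> str:
    """Build a SPARQL query for cities with their English label and population."""
    return f"""
SELECT ?city ?cityLabel ?population WHERE {{
  ?city wdt:P31/wdt:P279* wd:Q515 .
  OPTIONAL {{ ?city wdt:P1082 ?population . }}
  SERVICE wikibase:label {{ bd:serviceParam wikibase:language "en" . }}
}}
LIMIT {int(limit)}
""".strip()


def parse_bindings(response: dict) -> List[Dict[str, object]]:
    """Flatten a SPARQL JSON result into one dict per city."""
    rows = []
    for binding in response["results"]["bindings"]:
        uri = binding["city"]["value"]
        population = binding.get("population", {}).get("value")
        rows.append(
            {
                # The entity URI ends with the Wikidata ID, e.g. ".../entity/Q64".
                "qid": uri.rsplit("/", 1)[-1],
                "name": binding.get("cityLabel", {}).get("value"),
                "population": int(float(population)) if population is not None else None,
            }
        )
    return rows
```

The parser expects the standard SPARQL JSON results layout (`results` → `bindings`), so it can be tested against a saved response without calling the endpoint.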

Project Timeline:

A detailed 12 Week timeline for the duration of Google Summer of Code.

Community Bonding Period (May 4 - May 28):

- Given my unfortunate University End Semester Examination schedule, my entire community bonding period (May 5th - May 29th) will be taken up by university commitments.

- However, I do plan on spending time getting to know my project better even before the community bonding period starts (early April). This includes:

  - Determining the extent of malicious data in Wikidata and finding ways to filter it out. This is crucial, and would be the first priority before starting the project.

  - Catching up on relevant documentation & reference bots.

  - Writing tests and docs for the MusicBrainz-bot

  - Familiarizing myself with SPARQL for phase 2 of the project.

  - Continuing my ongoing PR on adding areas functionality to the MusicBrainz-bot (added method add_area in class MusicBrainzClient (editing.py) by Prathamesh-Ghatole · Pull Request #1 · metabrainz/musicbrainz-bot · GitHub)

  - Learning about ways to automate script execution and bot deployment using Docker, and potentially using tools like Apache Airflow and Kafka.

Phase 1 (May 29 - July 14):

- Week 1 (May 29 - June 04)

  - Complete Bot Service 1.1

  - Implement Bot Service 1.2

  - Write tests and docs for the above services.

  - Write tests and docs for the MusicBrainz-bot

- Week 2 (June 05 - June 11)

  - Explore SPARQL queries to give the best range of area data with a wide range of attributes to be added along with the area name.

  - Write queries for the following location types on priority: Countries, States (subdivisions), Cities, & Districts.

- Week 3 (June 12 - June 18)

  - Implement Bot Service 2.1 - Write a script to fetch data from Wikidata and MusicBrainz based on previously decided queries.

  - Implement Bot Service 2.2 - Write a script to transform the above datasets into a similar structure.

  - Write tests and docs for the above services.

- Week 4 (June 19 - June 25)

  - Implement Bot Service 2.3 - Write a script to compare newly fetched areas with areas already in MusicBrainz, and return a list of “new areas”, and “existing areas”.

    - I believe the performance of this particular script could vary highly based on implementation, so I’d like to take a little more time with this one to make sure it works well.

- Week 5 (June 26 - July 02)

  - Write tests and docs for the above service

  - Code Review and refactoring.

  - Getting ready for mid-term evaluations.

  - July 29 - MetaBrainz Perl bot’s 11-year anniversary

Phase 2 (July 03 - Aug 28):

- Week 6 (Jul 03 - Jul 09)

  - Implement Bot Service 2.5 - Implement a script to compare parameter differences in existing area data. Create a list of areas with updated parameters.

  - Write tests and docs for the above service.

- Week 7 (Jul 10 - Jul 16)

  - Implement Bot Service 2.6 - Implement a logging service for Bot Service 1.1, 1.2, and 2.4.

  - 15th - 16th Jul - Examinations

- Week 8 (Jul 17 - Jul 23)

  - Write tests and docs for the above service (Bot Service 2.6).

  - Code Review and Refactoring.

- Week 9 (Jul 24 - Jul 30)

  - Implement Bot Service 2.7 - Write driver code for the MusicBrainz-Bot to iteratively add new areas to the MusicBrainz database.

  - Write tests and docs for the above service.

- Week 10 (Jul 31 - Aug 06)

  - 1st - 6th Aug - Examinations (ESTIMATED)

- Week 11 (Aug 07 - Aug 13)

  - Continue implementing Bot Service 2.7 - Write driver code for the MusicBrainz-Bot to iteratively update areas with new metadata from Wikidata.

  - Write tests and docs for the above service.

  - Write tests and docs for the bot.

- Week 12 (Aug 14 - Aug 20)

  - Orchestrate all the scripts together, potentially using Apache Airflow and Kafka.

  - Use Docker for deployment.

  - Thoroughly test if all the components are working together properly.

  - Set up notifications to indicate bot failures.

  - Aug 20th - Leave for my birthday (maybe?)

- FINAL WEEK (Aug 21 - Aug 28)

  - A final round of code reviews and quality checks.

  - Complete README for the MusicBrainz-AreaBot

  - Write and submit the final GSoC Blog.

Why would I like to work with MetaBrainz?

The opportunity to work with MetaBrainz last year has been one of the BEST work experiences of my life. With the GSoC ‘22 project “Cleaning the Music Listening Histories Dataset”, I’ve had the opportunity to contribute to a cause I care about, made a ton of new friends, got mentored by some of the best thinkers & executers in the field of Music Technology, and even earned my first pay cheque! After looking out for more relevant projects in this community for the past few months, I think I’ve finally run into a project that’s interesting, impactful, and resonates with my skills and learning goals.

Along with my whole teen life revolving around technology, I’ve spent quite some time trying to learn the ins and outs of making music and consuming music like a maniac.

Throughout this journey, MetaBrainz has been an omnipresent helping hand; be it with tagging & identifying downloaded music with Picard, or powering sites like last.fm even before I knew the name MetaBrainz!

Ever since my music production days, I’ve been bugged by the lack of standardization in the music metadata space. Now, even more so since I’ve completely immersed myself in this domain. Thankfully, the MetaBrainz community has been infinitely helpful throughout this journey, and at this point, I’d be more than happy to give something back and learn a lot more about this very niche domain that interests me; especially when my goals & values seem to align really well with the community. I plan on continuing my contribution to MetaBrainz well after my tenure with GSoC ends.

Some Questions as asked on the Official MetaBrainz GSoC template:

- I am currently rocking an Asus TUF Dash F15 laptop (i5 12450H + 16 GB RAM + RTX 3050) as my primary machine.

- Thanks to the amazing MetaBrainz community, I also have access to the community server “Wolf”, and I’ve also previously worked with “Bono”.

- I started out in 6th grade by writing batch scripts on Windows

- Moved on to cybersecurity in 9th grade

- Took a pleasant turn in 10th grade toward music production

- Now for the past 3 years, I’ve been studying Data Science, Data Engineering, AI, and its applications in the world of Music, Metadata analysis, feature extraction, and related Social Networks.

- Back in 2022 I finally took my first step into the Music Tech industry and worked as a GSoC contributor here at MetaBrainz on the “Cleaning the Music Listening Histories Dataset” project

- You can find my music taste here: snaekboi - ListenBrainz

- I have a weird obsession with Japanese rock, post-hardcore, Progressive-rock, Math-rock, Tech House, Progressive House, Hardstyle, Lo-Fi Hip-hop, Orchestral Music, Piano Solos, and a total hotchpotch of a lotta weird genres!

- E.g. (recording MBIDs):

cba64bc9-28cf-447e-9625-4f079c06ec23, 2259521d-11ab-4bde-95bb-0942b2f42e32, 256a6018-21d1-4de2-af70-3532206f5ee5, f45fb199-97eb-4732-8c55-79185e22b457, f2d5d66f-cf72-4e04-b913-9da2cfa1affb

- I love working with Music Technology, Data Engineering, Analytics, and Machine Learning.

- Therefore, I am most interested in MusicBrainz & ListenBrainz nowadays.

- Yes, I love MusicBrainz Picard, and I’ve used it to tag every single track in my 20 GB offline music library

- Yes, as discussed above, I have previously contributed to Google Summer of Code in 2022, and have been active in Open Source communities ever since!

- I have also previously built personal projects like Last.fm Scraper which aims to scrape data from Last.fm/ListenBrainz, apply my own computations with the MusicBrainz/Spotify API, and provide the user with a rich set of world-class metadata features for personal use/analysis/archival.

- My interests mostly lie in the domain of Music Technology, Data Science, Data Engineering, Data Analysis, and Machine Learning, and I aim to build all my projects related to these domains.

- Some of my favorite personal projects are: Last.fm scraper ; Document Topic Modelling ; Portfolio Site

- I’d be able to find ~35-40 hours weekly for my GSoC activities with MetaBrainz.

- Just like last time, I’d also be attending to my university commitments from 17th June onwards. However, I’d be ending my extra commitments from college clubs and teaching assistant activities to make space for this project.

However, given my peculiar dual degree scenario, I’d have to take some time off for my examinations around the following dates:

Jul 15th -16th, Aug 1st - 6th (Estimated)

About Me:

Hi, I am Prathamesh, an aspiring Data Engineer based in Pune with a sheer obsession for data, music, computers, cats, and open-source software.

Back in 4th grade, I started tinkering around with computers and developed a severe passion for technology, making it a highlight of most of my teen life. 6 years ago I developed a similar passion for making & consuming insane amounts of music. Given my 3+ years of experience in Music Production, playing Piano, & Audio Engineering under my artist alias “SNÆK” & a life-long love for computers, my passion for the world of music and technology has now convolved into a passion for Data and AI involving various audio Technologies!

I have previously worked with the MetaBrainz Foundation as a Google Summer of Code ‘22 contributor. As a 3rd-year undergrad, I am currently pursuing a BS in Data Science and Applications at IIT Madras and a BTech in AI at GHRCEM Pune, where my friends know me for my enthusiasm & weird sense of humor, and my teachers know me for my perseverance and reliability.

Skills & Certifications:

- Technical Skills: Data Engineering, Data Analytics, API Scraping, Machine Learning, Web Automation.

- Tools: Python (Pandas, Matplotlib, scikit-learn, PyArrow, Numba, Requests, Multiprocessing, Mechanize), SQL (PostgreSQL), Git, Linux, Tableau, HTML, CSS, Hugo.

- Interests: Technology, Music Production, Playing Piano, Reading, Memes, Anime.

- Complete CV:

- Relevant Certifications:

  - Git from Basics to Advanced - Practical Guide for Developers - Udemy

  - Applied Data Science Specialization - Coursera

  - Python 3 Specialization - Coursera